I've spent years pattern-matching across startups, digging through founder trajectories, and watching ecosystems evolve. But nothing crystallizes the proximity advantage quite like watching the current AI wave unfold in San Francisco. If you're an AI founder operating outside the Bay Area right now, I'll cut to the chase: you're likely working with information that's 6 to 12 months behind what the top practitioners already know. That's not idle speculation. The lag shows up in research adoption patterns, model access timelines, and the velocity of knowledge transfer through dense networks.

Let me explain why this matters and what you can do about it.

The Psychology Isn't Vibes—It's Data

The "you become who you surround yourself with" principle isn't motivational-poster material; it's backed by serious research. Studies tracking thousands of people show that simply sitting next to someone raises the probability of becoming friends from 15% to 22%. Harvard psychologist David McClelland is widely quoted as putting a number on it: the people you habitually associate with determine as much as 95% of your success or failure.

In tech, this compounds fast. Behaviors spread through networks like viruses—when everyone around you is raising big rounds and thinking in 10x terms, that recalibrates your entire operating system. When your coffee-shop neighbor just closed a Series A and your gym buddy is scaling to 100 engineers, mediocrity stops being an option.

The 12-Month Knowledge Lag: Not a Theory, a Pattern

Here's where it gets concrete. An analysis of AI research publications from 2000 to 2010 revealed that China's research topics systematically lagged the U.S. by several years. Despite massive investment and eventually matching U.S. publication volume, China's choice of research topics more closely resembled what the U.S. had been working on in previous years than what it was working on in the current year.

This isn't about capability—it's about information flow architecture. The U.S., and specifically the Bay Area, sets the agenda. Everyone else follows with delay.

Now layer on the insider advantage. OpenAI and Anthropic provide early access to new models for select groups—sometimes 6 to 12 months before public release. Recent reports indicate OpenAI employees have been testing GPT-5 capabilities internally while the rest of the world is still optimizing for GPT-4. Anthropic runs similar beta programs with hand-picked customers—GitLab, Midjourney, Menlo Ventures—who get to build on capabilities that won't be widely available for months.

Translation: while you're reading the release notes, insiders have already shipped v2 of the thing you're just starting to prototype.

I Watched This Play Out at YC Demo Day

Earlier this year I attended YC's S25 Demo Day at their Dogpatch HQ in San Francisco. One minute, one slide, 150+ companies—most of them AI-native. What struck me wasn't just the quality (though 92% being AI-focused is wild). It was the velocity of information exchange.

Between pitches, I grabbed tacos from the food trucks and ended up in a 10-minute conversation with a founder who casually mentioned they'd been testing an unreleased model variant for two months. Another founder referenced a research technique I wouldn't see published until weeks later. These weren't secrets—they were just the baseline of what's considered "current" when you're embedded in the ecosystem.

That's the gap. It's not dramatic; it's cumulative and silent.

SF Is Where the Information Density Peaks

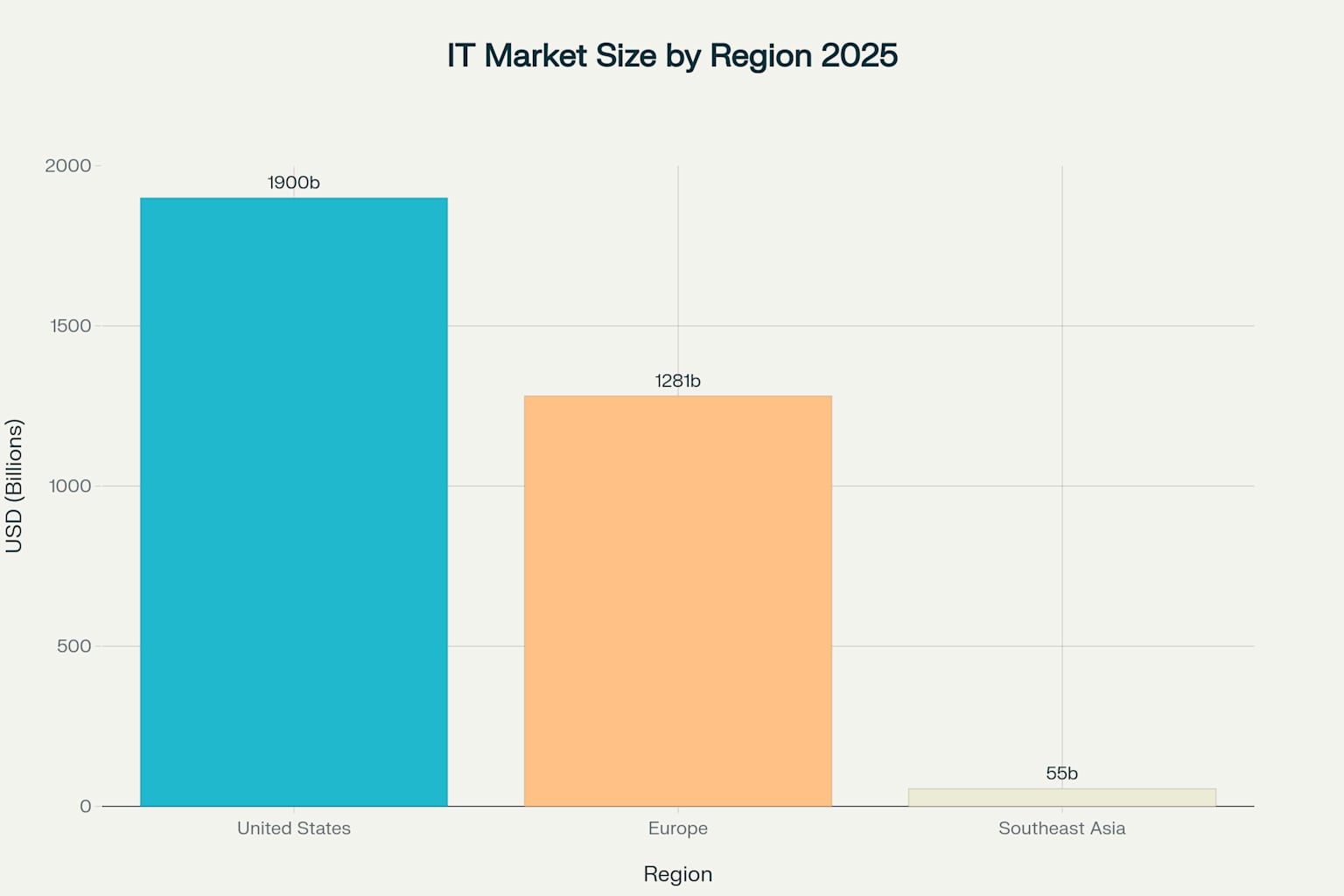

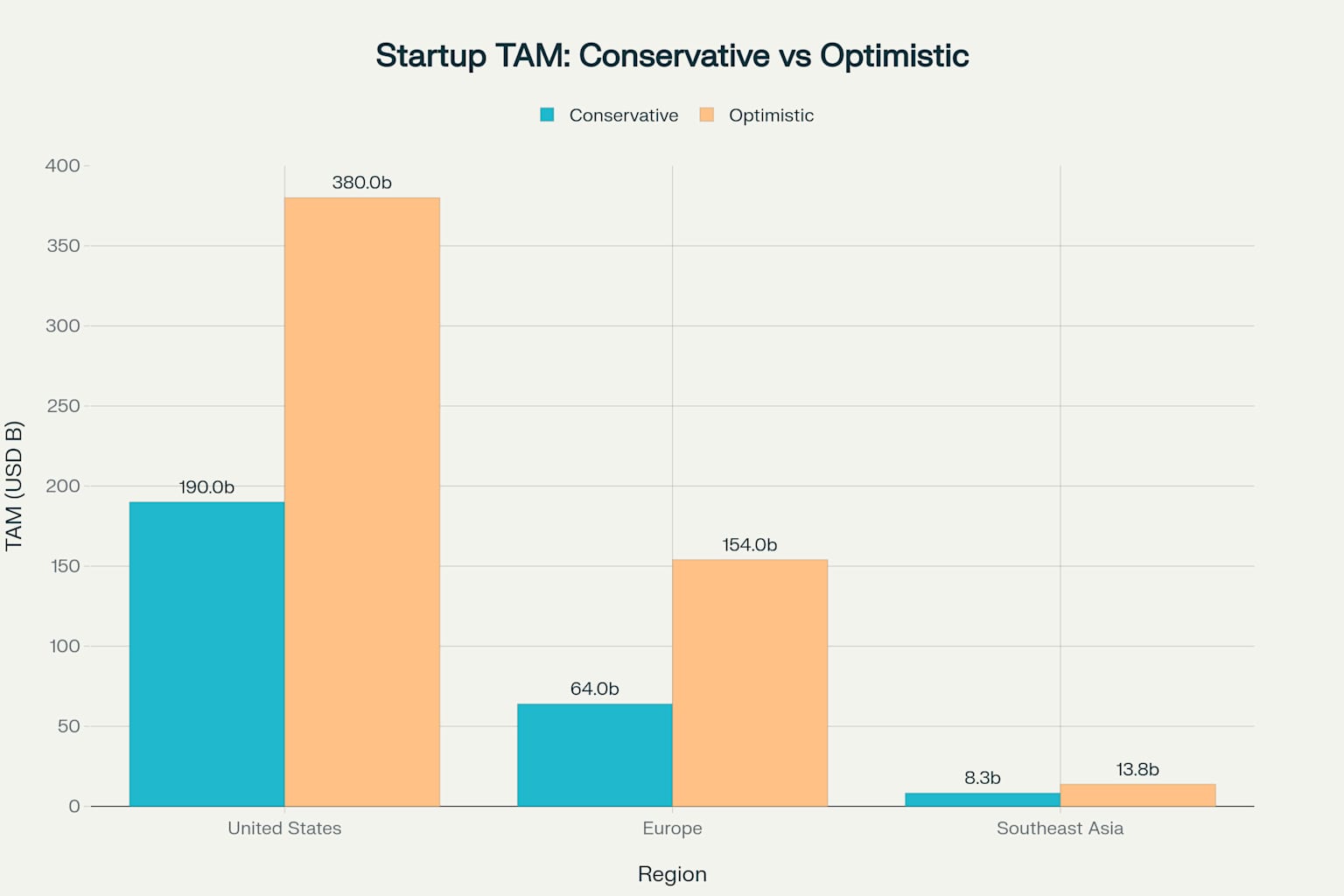

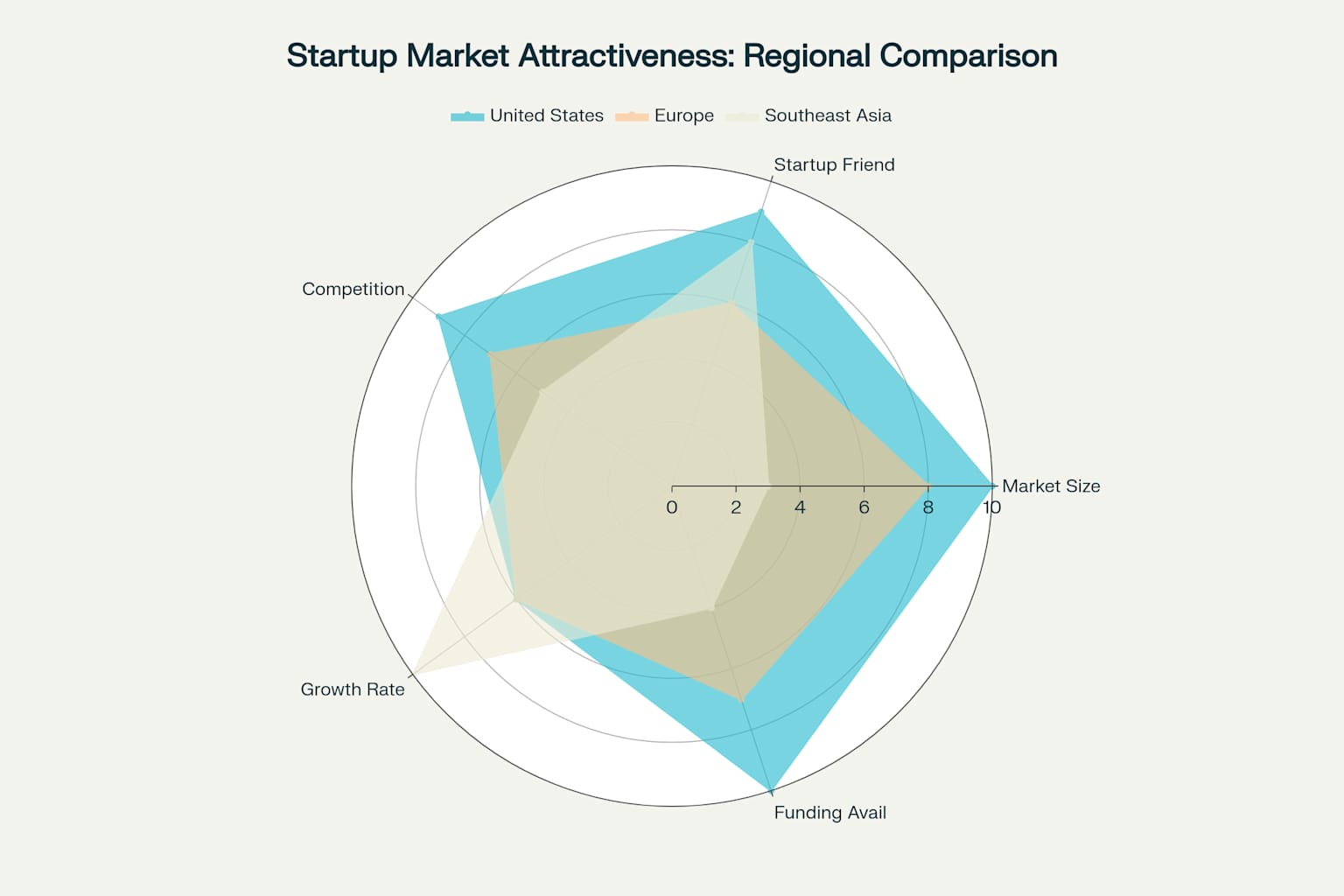

The numbers back up the anecdote. San Francisco pulled in over $29 billion in AI venture funding in the first half of 2025 alone—more than double the previous year and vastly outpacing every other city globally. Nearly 50% of all Big Tech engineers and 27% of startup engineers live in the Bay Area. OpenAI signed 500,000 square feet of office space and is hunting for more. Anthropic, Pika, Character.AI, and dozens of unicorns operate within walking distance.

This isn't just about talent density; it's about information flow velocity. One AI observer I follow mentioned attending an average of three AI-focused events per week in the Valley. That's 12+ high-bandwidth information exchanges per month for a single person, multiplied across thousands of participants. Monthly Silicon Valley meetups on GenAI, LLMs, and agents pack rooms with founders, researchers, and VCs, and Lu.ma and X.com are the local bibles for finding them.

Knowledge doesn't spread through press releases; it spreads through repeated, high-trust, face-to-face interactions. The Allen Curve, which came out of MIT professor Thomas Allen's studies of engineering teams, captures this: communication frequency drops exponentially with physical distance. In practice, this means a 30-minute coffee in SF with someone from DeepMind or Anthropic can shift your product roadmap in ways a dozen Zoom calls never will.

The Problem-Solving Velocity Nobody Talks About

Here's something that doesn't show up in funding announcements but matters enormously: you can solve technical and operational problems in SF far faster than anywhere else.

The Bay Area concentrates 35% of all AI engineers in the United States—Seattle, the second-densest hub, has only 23%. But it's not just the raw numbers; it's the depth and diversity of expertise. Your angel investors aren't just capital allocators—many are former CTOs who've debugged distributed systems at scale. Your advisors have shipped ML models in production at Google, Meta, or Anthropic. Your neighbor in the coworking space solved the exact infrastructure bottleneck you're hitting right now.

I've seen this play out repeatedly. A founder hits a gnarly RLHF training issue on Thursday afternoon, texts an advisor who used to run safety at an LLM unicorn, and by Friday morning has three potential solutions plus an intro to someone at Hugging Face who's dealt with the exact edge case. That 18-hour turnaround is rare in other ecosystems, not because the expertise is missing elsewhere, but because the density and accessibility of that expertise are unmatched.

Corporate VCs operating in the Bay now provide active mentorship, market access, and infrastructure resources beyond just checks. When you're stuck on a technical decision (whether to fine-tune or use RAG, how to architect your agent orchestration layer, which inference provider to use), you're not Googling or posting in Discord. You're texting someone who's already made that exact decision at scale and lived with the consequences.

The operational side is equally compressed. Hiring your first head of sales? Your investors can intro you to three candidates by Monday who've scaled GTM at AI companies. Need to navigate SOC 2 compliance? Someone in your YC batch just went through it and will walk you through the checklist over coffee. Fundraising strategy? Your advisor literally closed a $50M Series B last quarter and knows exactly what metrics Sequoia is asking for right now.

This isn't networking—it's operational infrastructure disguised as relationships. And it only works at this velocity when everyone is physically close enough for spontaneous problem-solving.

Real Founders Are Making the Move—And Saying It Out Loud

The migration patterns tell the story. AI founders from Canada, Europe, Asia, and Latin America are relocating to SF in unprecedented numbers. Indian VCs like Elevation Capital and Peak XV are opening SF offices specifically to stay close to AI developments.

Emmanuel Martes moved his fintech startup from Bogotá to San Francisco and captured it perfectly: "Everywhere else, you're a weird person who wants to start a company. Here, everyone is building."

Ben Su, a Canadian entrepreneur building an AI lawyer, explained his move: "We're hitting the ceiling in Canada, and the mecca of the startup world is in San Francisco." While Canadian startups raised less than $5 billion across all sectors, the Bay Area alone pulled in more than $27 billion in AI funding.

The NYT recently profiled the wave of 20-something founders flooding SF—many dropping out of MIT, Georgetown, and Stanford specifically to be in the city during the AI boom. Jaspar Carmichael-Jack moved to SF, built Artisan AI, and scaled it past $35 million in valuation. Brendan Foody left Georgetown at 19, raised millions for Delv, and is now hiring dozens in the Arena district near OpenAI's headquarters. These founders didn't just relocate geographically—they relocated into an operational support system that accelerates everything.

The pattern is clear: ambitious founders are voting with their feet because proximity compresses time.

The Compound Advantages Are Structural

When you're in SF, several things happen simultaneously:

Early Model Access: Companies like OpenAI run early access programs for safety researchers and select partners. If you're local and networked, you're far more likely to be in the room when capabilities get previewed. That can translate into a 6-to-12-month product development advantage over teams working with publicly available tools.

Conference Intel: Major AI conferences like NeurIPS and ICML create informal knowledge-sharing loops around presentations and workshops. Attendees get advance briefings, hallway demos, and pre-publication insights that never make it into the proceedings. Being there in person means absorbing trends months before they're documented.

Talent Movement Signals: When a key DeepMind researcher joins Anthropic or an OpenAI engineer spins out a new company, the implications are immediately obvious to insiders. You hear about pivots, technical breakthroughs, and capability jumps through informal networks before they're announced publicly.

VC Intelligence Networks: Bay Area VCs don't just write checks; they aggregate intelligence across their portfolios. When Sequoia or a16z share pattern recognition about emerging trends, they're synthesizing confidential data from hundreds of startups. That intelligence is hard to come by in other ecosystems.

Face-to-Face Conversion Rates: In one widely cited experiment, face-to-face requests were 34 times more likely to succeed than emailed ones. For fundraising, recruiting, and partnerships, being in the room isn't a nice-to-have; it's the difference between a warm intro and a cold outbound.

Expert Problem-Solving Speed: With nearly 50% of Big Tech engineers concentrated in the Bay Area, the time between "we're stuck" and "here's how to fix it" collapses from weeks to hours. Your advisors and investors aren't just cheerleaders; they're active debugging partners who've already solved your exact problem.

The Cost of Distance

While remote work democratized access to global talent, it also revealed the irreplaceable value of physical proximity. Virtual collaboration tools cannot replicate the spontaneous interactions that drive innovation. The most breakthrough ideas often emerge from unplanned conversations—the coffee shop encounter that becomes a partnership, the hackathon that spawns a unicorn, the demo day that attracts unexpected investors.

By one widely cited figure, 77% of remote employees are more productive on routine tasks, but innovation requires the serendipity that only physical proximity provides. When groundbreaking AI research is being discussed in San Francisco coffee shops and at exclusive invite-only dinners, remote founders miss critical insights and opportunities.

The knowledge lag compounds over time. Research shows that informal knowledge-sharing mechanisms—professional networking events, mentorship programs, and casual interactions—are critical drivers of AI innovation. When these interactions are geographically concentrated, outsiders operate with systematically outdated information that affects fundamental business decisions.

More critically, when you hit a technical wall at 11pm and need someone who's debugged transformer architecture issues at production scale, texting an advisor two blocks away instead of posting in a Slack channel spread across global time zones can mean the difference between shipping Monday and shipping next month.

What This Means If You're Building

The playbook isn't complicated, but it requires commitment:

Establish a Physical Presence: You don't need to move your entire team overnight, but having founders and key decision-makers in SF for sustained periods is non-negotiable. Aim for 6-12 week sprints aligned to model release cycles, major conferences, and fundraising windows.

Default to In-Person for High-Stakes Interactions: Investor pitches, lighthouse customer meetings, senior IC recruiting—do these face-to-face whenever possible. The conversion delta compounds over quarters.

Build a Local Advisory Lattice of Technical Operators: Surround yourself with practitioners who are one hop from frontier labs, leading research groups, or policy/safety desks. Prioritize advisors and angels who've actually built and scaled AI systems in production—their ability to help you debug architectural decisions or navigate technical tradeoffs in real-time is worth more than their capital.

Prioritize Networks Over Newsfeeds: The most valuable information never hits TechCrunch. It spreads through meetups, invite-only dinners, hackathons, and coffee chats. Treat your calendar like an operating system—weekly office hours with VCs, monthly customer deep-dives, quarterly recalibrations based on new model capabilities.

Weaponize Geographic Proximity for Speed: When you're blocked on a technical decision, use the density advantage. Text an advisor, grab coffee with a portfolio founder who's been there, or walk into an investor's office with your laptop open. The 18-hour problem-solving loop only exists in SF.

Bottom Line

The proximity principle that governs friendships also governs information access and problem-solving velocity, and in AI, both timing and execution speed are everything. San Francisco remains the highest-signal, highest-leverage surface area in the world for AI—not because of weather or culture, but because knowledge flows 6-12 months ahead of everywhere else and technical problem-solving happens at 10x speed.

If you're serious about building a category-defining AI company, the math is simple: surround yourself with the best, plug into the densest information networks, compress the feedback loop between idea and execution, and tap into the collective technical expertise that can unblock you in hours instead of weeks. That happens in one place right now, and it's not over Zoom.

The future belongs to founders bold enough to position themselves at its center. For AI in 2025, that center is unquestionably San Francisco, where proximity to greatness, exclusive access to tomorrow's breakthroughs, and on-call world-class problem-solvers become the catalyst for extraordinary achievement.